BBC’s Deepfake Experiment Exposes the Danger—How ValidSoft Detects the Threat

A recent BBC article, “Is There Something Special About the Human Voice?”, explored the increasing difficulty of distinguishing human voices from AI-generated synthetic voices. The experiment it described offered a fascinating lens into this issue, underscoring the challenges individuals and organizations face in identifying deepfakes.

As part of the study, the chief AI architect from New York University’s Stern School of Business created two audio clips: one featuring his natural voice and the other generated by ElevenLabs’ voice cloning technology. The clips, both featuring a passage from Alice in Wonderland, were played to a group of participants.

BBC Test of Perception: Human vs. Deepfake

When the two clips of the passage from Lewis Carroll’s Alice in Wonderland were played to a number of people, around 50% were unable to discern which was human and which was the deepfake. Participants had different theories about what to listen for, including irregular pauses, variations in volume, cadence, and tone, as well as inflection and breathing. Generative AI engines will be able to overcome any of these potential giveaways in time, if they have not already.

Another point to make about this exercise is that the participants knew one clip was a deepfake, so they were actively trying to discern any perceived abnormality in the audio. Unlike the controlled environment of the BBC study, where participants were primed to identify a deepfake, real-world scenarios are far less predictable. Individuals on a phone call, in a virtual meeting, or on a conference call are rarely on high alert for synthetic voices. This lack of vigilance makes detecting deepfakes even more challenging and increases the risk of fraudulent activity, from social engineering scams to financial fraud.

The Real-World Implications

For organizations looking to detect fraud vectors based on deepfakes, the real challenge, apart from finding a reliable and accurate identification method, is scalability: the requirement to monitor every interaction. This precludes using employees as deepfake detectors, even if they could semi-reliably identify deepfakes, which the results of the BBC exercise suggest they cannot.

ElevenLabs, the creator of the Alice clip, can provide a tool that identifies deepfakes created by its own software, but it is only one of many deepfake creation engines. Expecting every deepfake generator to provide detection algorithms or watermarking is neither realistic nor viable.

An AI-Powered Solution

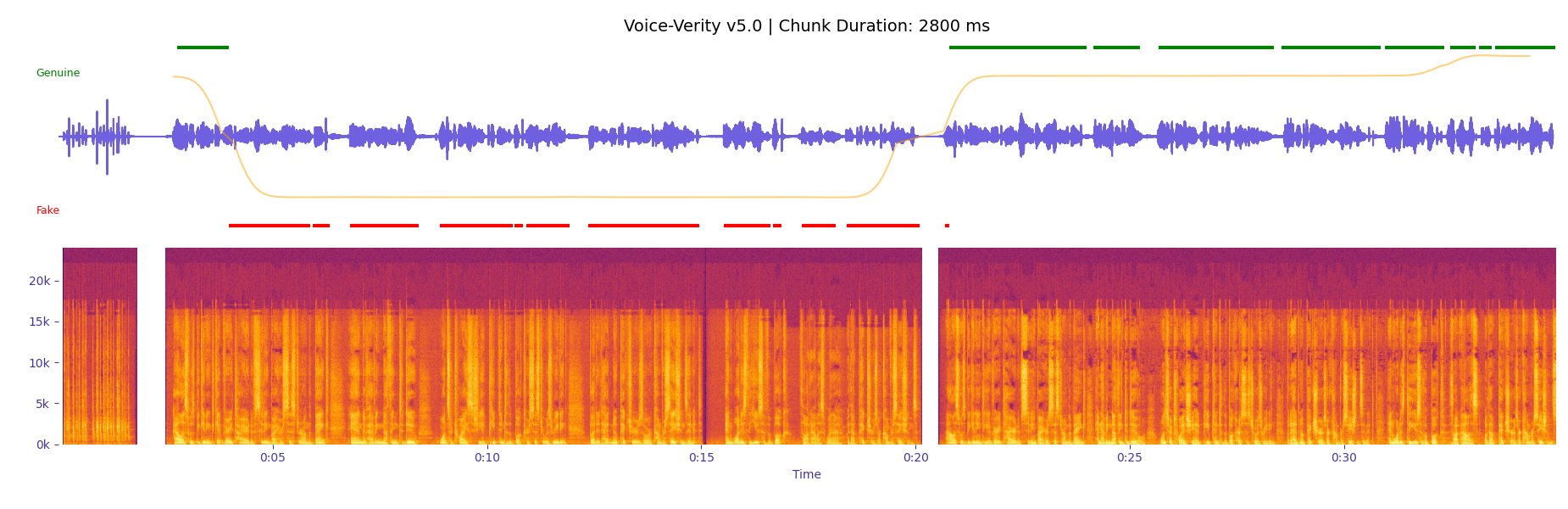

The only way to provide the necessary scale and high reliability is with AI itself. The image above shows the results from inputting the Alice in Wonderland audio clips into ValidSoft’s standalone deepfake detection engine, Voice Verity®.

Clip 1, as explained in the BBC article, is the deepfake, and as can be seen above, the continuous line goes from fake to genuine once the second clip starts playing. Voice Verity® is a non-biometric AI solution based on large-scale Deep Neural Network (DNN) techniques. It requires no enrolment, no consent, and no PII. Operating both in real time and as a background process, it can be integrated into any channel, from contact centers, IVRs, and IVAs to browser-based applications. It is language agnostic and truly generic in its detection of all Generative AI audio engines, both current and, critically, future.

The BBC experiment serves as a wake-up call for organizations across industries. As the boundaries between human and synthetic audio blur, relying on human perception alone is no longer sufficient. Scalable, AI-powered solutions are essential for staying ahead of these emerging threats.

Detecting AI-generated audio effectively, accurately, and at scale requires an AI-based solution, and Voice Verity® can keep organizations from going down rabbit holes, unlike Alice.

Test your knowledge: can you detect the deepfake audio in the clip?