Sir David Attenborough’s Voice and the Rise of AI Cloning: An Iconic Voice Under Siege

By Dr Benoit Fauve, Chief R&D Officer

Sir David Attenborough is more than just a broadcaster and natural historian; he’s a voice that generations have grown up with, a calm and authoritative narrator of nature’s wonders. Dubbed a “national treasure” by fans and media alike (though he himself resists the term), Attenborough’s voice is an integral part of his identity and work. In the digital age, however, even a voice as iconic as his isn’t safe from exploitation. Recent developments in AI cloning have led to the unauthorized use of his voice, raising questions about ethics, legality, and the impact of such technology on both creators and audiences.

Sir David Attenborough: The Voice Heard Around the World—and on Social Media

Attenborough’s voice is so distinctive that it has become a cultural meme. The phrase “in Attenborough’s voice” often pops up on social media, humorously paired with observations about life’s oddities, like pigeons battling over a chip on the street—or humans in unfortunate situations. The meme reflects a broader cultural affection for the broadcaster.

Developers, empowered by tools such as ChatGPT’s Vision API and text-to-speech software from ElevenLabs, have taken the meme a step further. Some examples are harmless and comedic, such as this viral post on X from last year, where a developer combined AI tools to create a spoof documentary on mundane office life. It’s all in good fun, until it isn’t. As the technology spreads, its darker side is becoming harder to ignore.

When Imitation Becomes Exploitation

The problem escalates when AI-generated voices are used for more nefarious purposes.

The BBC recently reported on the unauthorized use of Sir David Attenborough’s voice in AI-generated content. The report highlighted how his voice is being manipulated, without his consent, to say things he never would. This misuse goes beyond harmless imitation, crossing into a realm of ethical and potentially legal violations.

In response to concerns raised by the BBC’s report, the team at ValidSoft conducted a deeper analysis of various online news platforms, including The Intellectualist, which has over 200,000 followers on X. The team discovered content created with AI tools like ChatGPT, paired with synthetic voices designed to sound like Attenborough. These tactics may be used to create an air of authority and increase user engagement.

While this specific platform often covers political topics, similar approaches are observed across the spectrum of online media, from left-leaning outlets to conservative sites, all seeking to maximize attention through sensationalized content. ValidSoft’s analysis suggests that in this case, the synthetic voice content has likely been produced using AI voice generation tools from ElevenLabs.

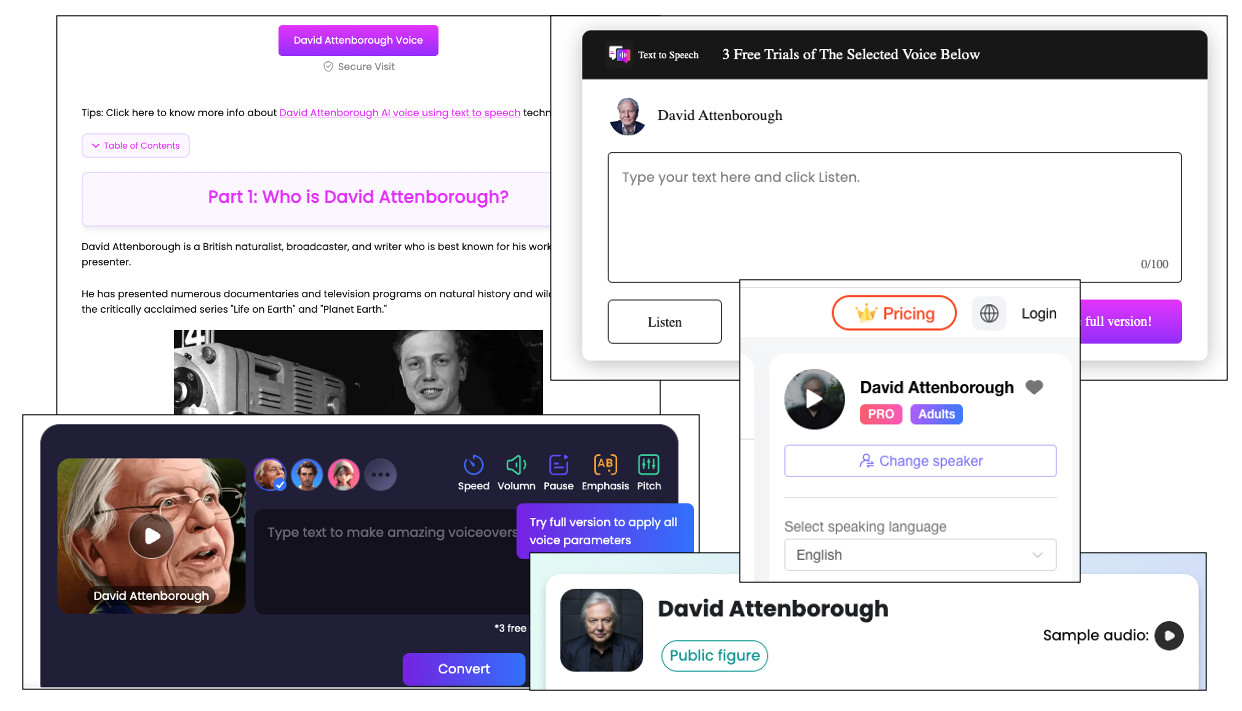

Adding to the issue, several websites now offer text-to-speech (TTS) services explicitly mimicking Sir David Attenborough’s voice. Some even include his biography, blatantly leveraging his fame, while others present vague offerings like “English Male Voice,” paired with images clearly designed to evoke his likeness.

What’s especially troubling is the method some services employ to train these models. Source tracing by ValidSoft reveals a cascade: users clone a celebrity voice using a paid service such as ElevenLabs, generate large amounts of synthetic audio, and then use that output to train open-source TTS models. These models are later monetized behind paywalls. The result is a feedback loop in which stolen material perpetuates further theft.

The Legal and Ethical Quagmire

In the UK, the unauthorized use of someone’s voice or likeness for commercial purposes exists in a legal grey area. Copyright law may protect original recordings, but it hasn’t fully caught up to the complexities of AI-generated voice clones.

One potential avenue is the doctrine of passing off, where someone falsely represents a product as being endorsed by another. However, this requires proving that the claimant has a reputation or ‘goodwill’ in their voice (easy enough for Attenborough, but perhaps not for less well-known persons), as well as showing that the usage constitutes a misrepresentation causing financial damage, which can be challenging in cases involving AI impersonation.

For public figures like Sir David Attenborough, the emotional and reputational distress is undeniable. In a recent BBC interview, he expressed his dismay, saying:

“I am profoundly disturbed to find that these days my identity is being stolen by others and greatly object to them using it to say whatever they wish.”

For someone whose career has been built on authenticity and trust, the unauthorized misuse of his voice to spread fabricated, fraudulent, or trivial content is profoundly upsetting.

The Role of Detection and Accountability

Despite the increasing prevalence of AI-generated voices, the detection tools available today remain underutilized. As a consequence, enforcement remains lax, leaving creators vulnerable. Social media and content platforms could play a much more proactive role in protecting creators by deploying such tools to screen uploads in real time and to check, flag, or deny synthetic content.
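As a rough illustration of what such upload screening might look like, the sketch below maps a detector's score to an allow, flag, or deny decision. It is a minimal example under stated assumptions, not a description of any real product: the `synthetic_score` input, the `screen_upload` function, and both threshold values are hypothetical, and a real platform would tune thresholds against its own data and route flagged items to human review.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    FLAG = "flag"
    DENY = "deny"


def screen_upload(synthetic_score: float,
                  flag_threshold: float = 0.5,
                  deny_threshold: float = 0.9) -> Decision:
    """Map a detector score in [0, 1] to a moderation decision.

    Assumption: synthetic_score comes from some synthetic-speech detector
    (hypothetical here), where higher means more likely AI-generated.
    The default thresholds are illustrative placeholders only.
    """
    if not 0.0 <= synthetic_score <= 1.0:
        raise ValueError("synthetic_score must be between 0 and 1")
    if flag_threshold > deny_threshold:
        raise ValueError("flag_threshold must not exceed deny_threshold")

    if synthetic_score >= deny_threshold:
        return Decision.DENY   # very likely synthetic: block the upload
    if synthetic_score >= flag_threshold:
        return Decision.FLAG   # uncertain: label it or send for review
    return Decision.ALLOW      # likely genuine: publish as normal
```

Keeping a middle "flag" band, rather than a single cut-off, leaves room for legitimate synthetic speech (for example, assistive voices) to be labelled or reviewed instead of rejected outright.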

For celebrities and other public figures, this lack of accountability compounds the problem. Their voices are not just tools—they are extensions of their identity, trust, and legacy, and must be protected.

A Call for Ethical AI Usage

While AI has the potential to revolutionize creativity and accessibility, its misuse presents significant challenges. Sir David Attenborough’s voice—a symbol of wisdom and environmental stewardship—should not become fodder for clickbait or a novelty for unscrupulous TTS services.

To prevent further misuse, a multi-faceted approach is needed. Laws should be updated to address unauthorized voice cloning explicitly. Platforms and developers must prioritize ethical boundaries, and detection tools should be more widely adopted to protect creators.

ValidSoft reached out to Dr. Jennifer Williams, who appeared in the BBC segment, for further comments and insights on the issue. She emphasised:

“Because speech synthesis has so many other applications in society, such as helping people speak who have lost their voice due to a medical condition, it’s important to approach this problem of deepfakes responsibly. Outright banning the entire scope of this technology, that already helps people in the UK participate in society, might not be the right approach. There are a lot of different stakeholders to consider in the ethical and responsible use of AI.”

As we reflect on the multifaceted nature of audio deepfakes, capable of both harm and benefit, it is crucial to respect the wishes of individuals like Sir David Attenborough, who have contributed so profoundly to our cultural and intellectual heritage. Let us ensure that their voices remain authentic, speaking only on their terms, and honouring the legacies they have carefully cultivated over a lifetime.